|

April 10, 2007 — The computation of optic flow is an essential step in many

computer-vision applications. The optic-flow field provides information about ego-motion, depth, and

independently moving objects. Most optic-flow algorithms accurately compute velocity estimates at

illumination edges; however, the results for image areas of constant illumination are usually poor.

To overcome this limitation, global strategies for optic-flow computation need to be employed.

We developed a novel technique for the computation of optic flow based on constructive interference

of global Fourier components. Since this method does not employ local windowing and thus uses all frequencies

in the computation, velocities for areas of constant illumination can be derived.

The visual scene observed by a camera can be represented as a three-dimensional distribution of intensities.

In the algorithm, the image sequence is decomposed first into translating gratings, the Fourier components,

which move with velocities determined by their spatial and temporal frequencies. The combined movements of the

gratings contributing to a particular point of the image sequence determine the local velocity at this point.

A voting scheme combining both intersection of constraints and the principle of constructive interferences is

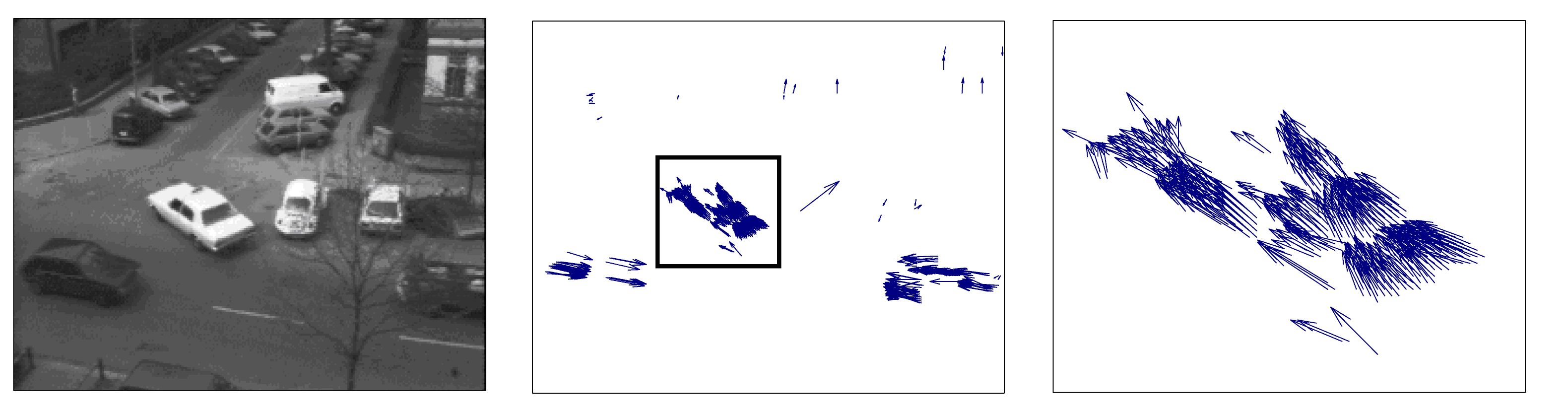

employed to estimate the local velocities. In Fig. 1, the results of the algorithm for two image sequences are

shown.
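The relation between a grating's frequencies and its velocity, and the intersection-of-constraints idea, can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the voting scheme described above: it assumes the whole sequence translates rigidly, so every Fourier component with frequencies (fx, fy, ft) satisfies fx·vx + fy·vy + ft = 0, and it solves for a single global velocity by power-weighted least squares. The function names `grating_constraints`, `normal_velocity`, and `global_velocity` are hypothetical and chosen for this example only.

```python
import numpy as np

def grating_constraints(seq):
    """3D FFT of a (T, H, W) sequence: power and frequency of every grating."""
    T, H, W = seq.shape
    power = np.abs(np.fft.fftn(seq)) ** 2
    # Frequencies in cycles per frame (ft) and cycles per pixel (fy, fx).
    ft, fy, fx = np.meshgrid(np.fft.fftfreq(T), np.fft.fftfreq(H),
                             np.fft.fftfreq(W), indexing="ij")
    return power, fx, fy, ft

def normal_velocity(fx, fy, ft):
    """Velocity of a single grating along its own wave vector (fx, fy)."""
    k2 = fx ** 2 + fy ** 2
    safe = np.where(k2 > 0, k2, 1.0)          # avoid division by zero
    scale = np.where(k2 > 0, -ft / safe, 0.0)
    return scale * fx, scale * fy

def global_velocity(seq):
    """Intersection of constraints for one rigid translation (assumption).

    Solves fx*vx + fy*vy + ft = 0 over all gratings, weighted by power.
    Raises numpy.linalg.LinAlgError if all gratings share one orientation,
    because then only the normal component is constrained.
    """
    power, fx, fy, ft = grating_constraints(seq - seq.mean())
    w = power.ravel()
    A = np.stack([fx.ravel(), fy.ravel()], axis=1)
    b = -ft.ravel()
    AtA = A.T @ (A * w[:, None])
    Atb = A.T @ (w * b)
    return np.linalg.solve(AtA, Atb)

# Synthetic check: two gratings translating together at (vx, vy) = (1, 2) px/frame.
t, y, x = np.meshgrid(np.arange(16), np.arange(32), np.arange(32), indexing="ij")
seq = np.cos(2 * np.pi * (x - 1.0 * t) / 16) + np.cos(2 * np.pi * (y - 2.0 * t) / 32)
vx, vy = global_velocity(seq)
print(vx, vy)
```

For this band-limited synthetic sequence the estimate is close to the true translation. A local velocity field, as in Fig. 1, requires relating the gratings to individual image points, which this global sketch does not attempt.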

The method opens a new perspective on the computation of optic flow and on the inference of local measurements from

global signals in general. In the future, pre-segmentation of the image sequence could be employed to improve the

optic-flow computation at image regions of intensities which are close to the average intensity of the image.

Fig. 1: Estimated optic-flow fields of two real image sequences. The taxi sequence shows a scene containing a taxi

turning the corner, a car driving from the left to the right, a truck driving from the left to the right, and a

pedestrian walking along the sidewalk in the upper left corner (upper left panel). The estimated optic-flow field

is shown in the upper middle panel. The enlarged inset (black square) containing the taxi is presented in the upper

right panel. The second sequence shows a person walking from the right to the left (lower left panel). The estimated optic-flow

field and an enlarged inset containing the legs are presented in the lower middle and right panels, respectively.

Using only the motion stream for the detection of independently moving objects (IMOs) leads to discontinuous and

sparse IMO representations. The recognition stream deals with static

images and does not use temporal information. Neither of these

streams alone can therefore provide satisfactory quality in the final IMO detection.

Moreover, the idea of two processing streams is widely accepted and

supported by visual neuroscience.

Segmentation of image sequences using superparamagnetic clustering

Image segmentation provides an important first step in image analysis.

On the segmented parts of the image(s), further analysis steps can be performed, including object identification

and recognition. For many tasks, e.g., depth computation, object tracking, and 3D reconstruction, it is desirable

that the segments, or objects, carry information about depth and 3D velocity.

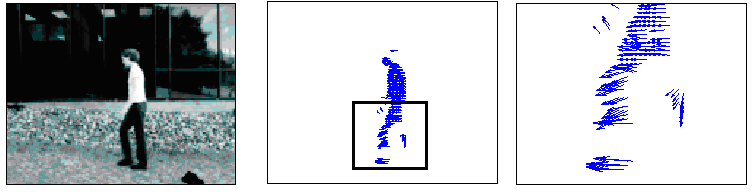

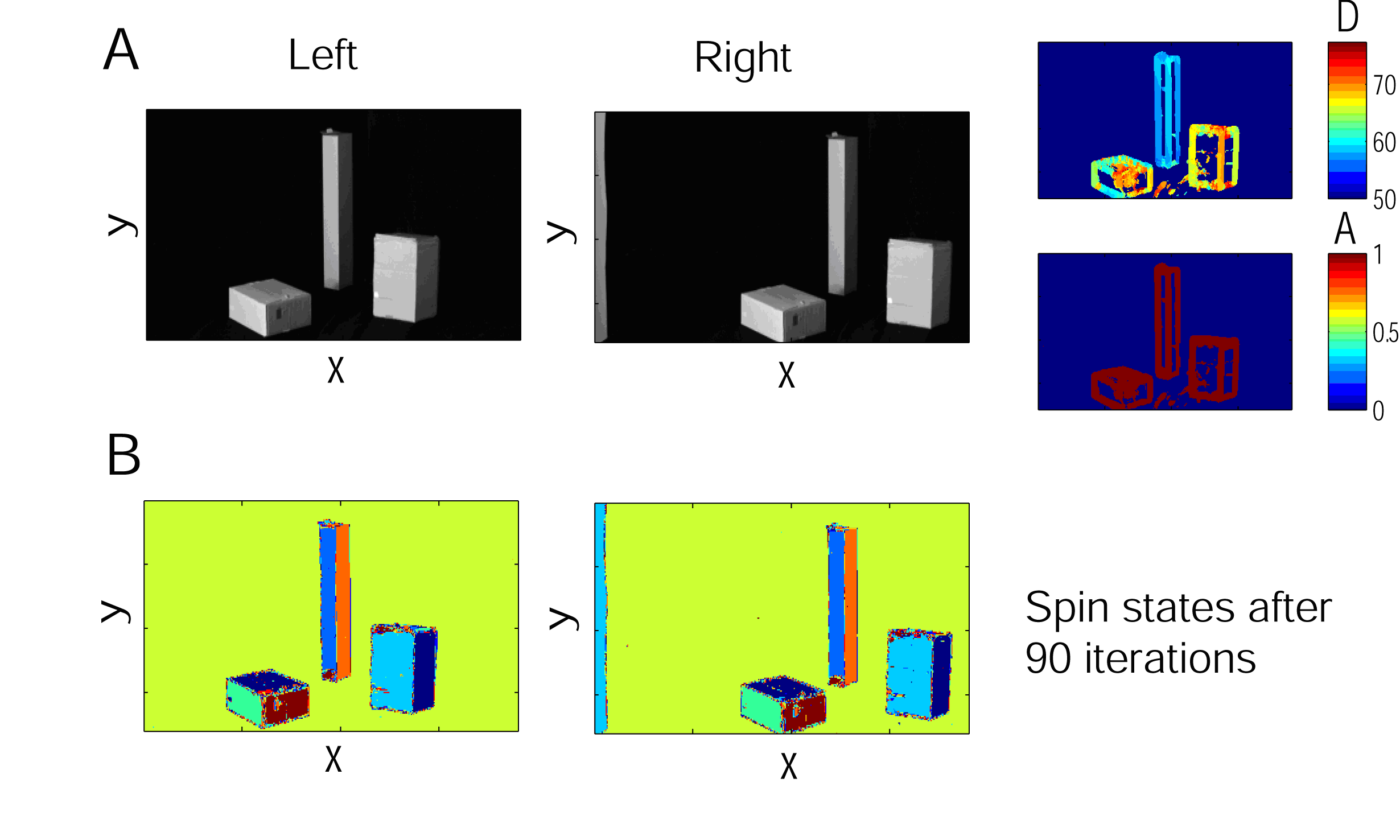

We developed an algorithm based on superparamagnetic clustering for segmenting 3D

objects from image sequences, such as stereoscopic objects or moving objects. Neighborhood relationships across

frames necessary for the clustering process are established by means of disparity or optic-flow maps from standard

stereo or optic-flow algorithms. Segmentation results of the algorithm for a stereo image pair and a motion sequence

are shown in Fig. 2 and Fig. 3, respectively.
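How an optic-flow or disparity map can establish neighborhood relationships across frames may be easier to see in code. The sketch below is an assumption-laden illustration rather than the algorithm itself: it links each pixel of one frame to the pixel its flow vector points to in the next frame, skips pixels with missing flow (marked as NaN here), and assigns a Gaussian gray-level coupling. The names `cross_frame_links` and `coupling`, the NaN convention, and the parameter `sigma` are all hypothetical.

```python
import numpy as np

def cross_frame_links(flow):
    """Link pixels of frame k to frame k+1 along the flow.

    flow: (H, W, 2) array of (dy, dx) displacements, NaN where unknown.
    Returns a list of ((y, x), (y2, x2)) pixel pairs.
    """
    H, W, _ = flow.shape
    links = []
    for y in range(H):
        for x in range(W):
            dy, dx = flow[y, x]
            if np.isnan(dy) or np.isnan(dx):
                continue  # incomplete flow map: no cross-frame neighbor
            y2, x2 = y + int(round(dy)), x + int(round(dx))
            if 0 <= y2 < H and 0 <= x2 < W:
                links.append(((y, x), (y2, x2)))
    return links

def coupling(g_i, g_j, sigma):
    """Gray-level similarity in (0, 1]; the Gaussian form is an assumption."""
    return float(np.exp(-((g_i - g_j) ** 2) / (2.0 * sigma ** 2)))

# Tiny example: everything moves one pixel to the right, one flow value is missing.
flow = np.zeros((2, 3, 2))
flow[..., 1] = 1.0
flow[0, 0] = np.nan
frame0 = np.array([[10.0, 10.0, 50.0], [10.0, 10.0, 50.0]])
frame1 = np.array([[10.0, 10.0, 10.0], [10.0, 10.0, 10.0]])
for (p, q) in cross_frame_links(flow):
    print(p, q, coupling(frame0[p], frame1[q], sigma=5.0))
```

Pixels without a flow estimate simply contribute no cross-frame link; their within-frame neighbors can still tie them to a segment.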

Fig. 2: Finding corresponding image regions. (A) Two different views, left and right, of a scene consisting of several

paper boxes (left and middle panels) are shown. The respective disparity and amplitude maps are shown in the upper and

lower right panels. (B) The spin states after 90 iterations are depicted. Image points which belong to the same image

segment (or object) are in the same spin state. The reverse is not true, since the spin states are not identical to

the cluster labels.
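The distinction between spin states and cluster labels can be illustrated with a small example. The sketch below is a simplified stand-in, not the labeling procedure of the algorithm: it assumes a single snapshot of spin states and labels connected regions of equal spin with a 4-neighborhood flood fill. The function name `labels_from_spins` is hypothetical.

```python
import numpy as np

def labels_from_spins(spins):
    """Label 4-connected regions of equal spin state in a 2D array."""
    H, W = spins.shape
    labels = -np.ones((H, W), dtype=int)  # -1 marks unlabeled pixels
    next_label = 0
    for sy in range(H):
        for sx in range(W):
            if labels[sy, sx] != -1:
                continue
            labels[sy, sx] = next_label
            stack = [(sy, sx)]
            while stack:  # flood fill over neighbors with the same spin
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < H and 0 <= nx < W
                            and labels[ny, nx] == -1
                            and spins[ny, nx] == spins[y, x]):
                        labels[ny, nx] = next_label
                        stack.append((ny, nx))
            next_label += 1
    return labels

# Two separate regions share spin state 1 but receive different labels.
spins = np.array([[1, 2, 1],
                  [1, 2, 1]])
print(labels_from_spins(spins))
```

Here the left and right columns are in the same spin state yet form distinct segments, which is why equal spin states alone do not imply membership in the same cluster.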

The 3D segmentation algorithm provides a framework for combining different object cues, here, gray-level similarity,

disparity, and optic flow, to arrive at first representations of objects. Most importantly, the algorithm gives

reliable and stable results even if the disparity or optic-flow maps are largely incomplete or afflicted with errors.

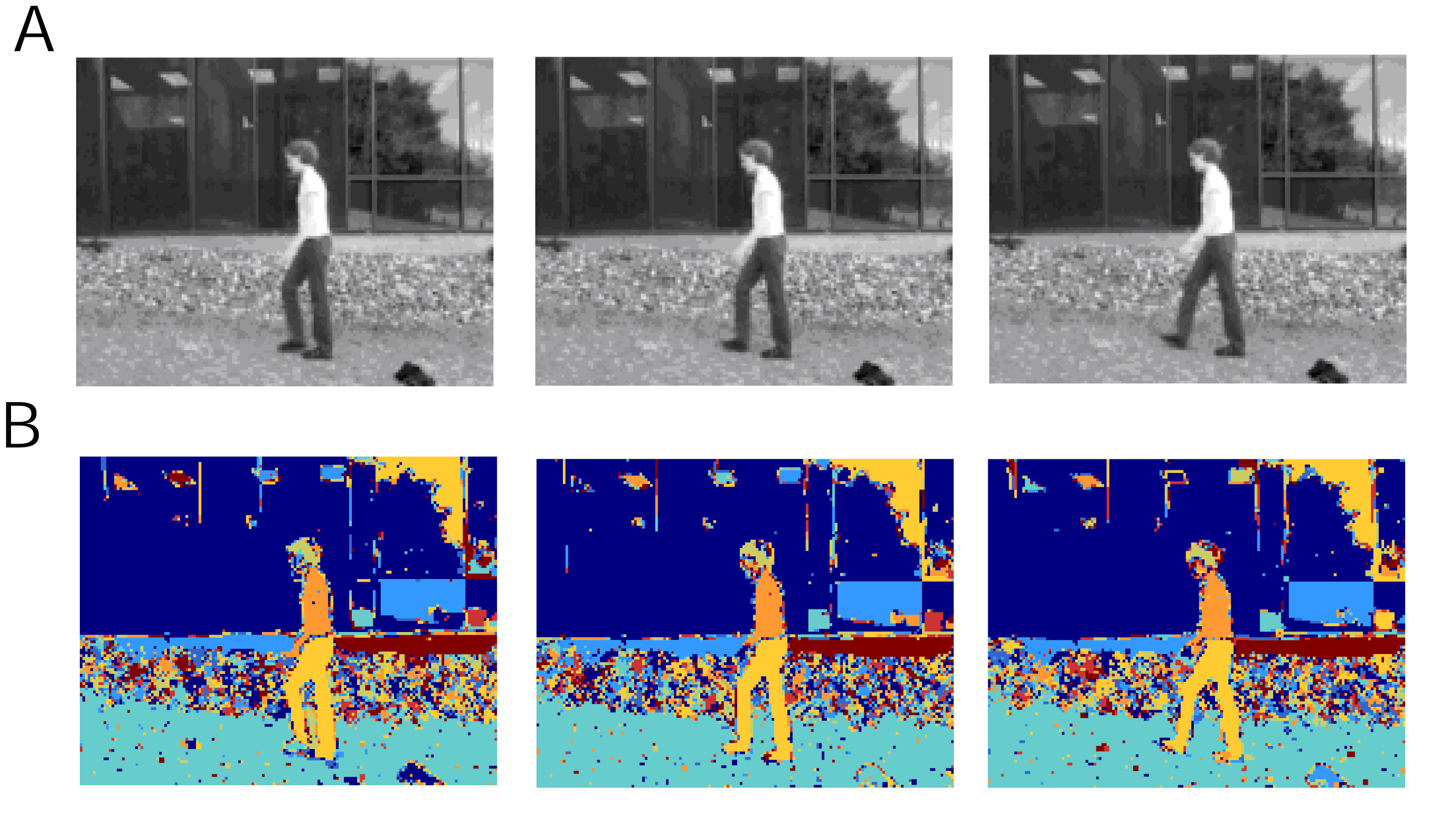

Fig. 3: Object tracking. (A) A woman walking from right to left is depicted. (B) The spin states after 94 iterations

are shown. Stable 3D clusters have formed, namely the legs, the upper body, and a part of the head. The 3D clusters can

now be tracked across frames.

Babette Dellen

Florentin Woergoetter

Department of Computational Neuroscience, Georg-August-University Göttingen

|