|

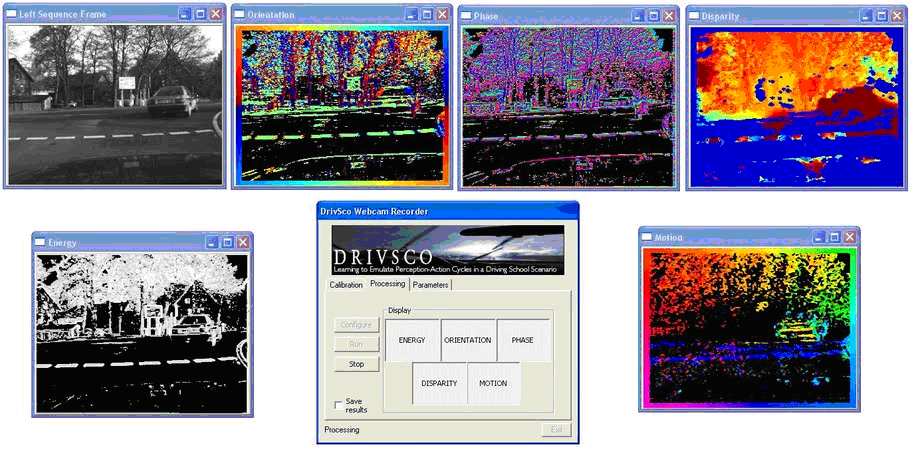

December 11, 2009: We have developed a low-level processing engine for vision applications. It is composed of customized hardware modules for computing optical flow, stereo, and local image features (local energy, orientation, and phase evaluated at different image scales). In addition, we have integrated embedded processors based on PowerPC cores, which allow the hardware modules to be controlled and accessed using standard C or C++ code. Linux-based operating systems are also supported.

Specific architectures for different low-level vision modalities have been developed and described using reconfigurable hardware. Each of them tries to solve a single low-level vision problem: optical flow, disparity, segmentation, tracking, etc. In line with the requirements of the DRIVSCO project, the group of Eduardo Ros at the University of Granada (http://atc.ugr.es/~eduardo) has developed a novel architecture that combines multiple processing engines into a single massively parallel low-level vision processing engine of very high complexity and performance. Our design processes input images and extracts several visual features at the same time: multi-scale stereo, multi-scale optical flow, and multi-scale local contrast descriptors such as local orientation, energy, and phase. The system is based on a harmonic-filter image decomposition model built on Gabor-like filters. This model has been validated in multiple scenarios in previous work, and it allows hardware resources to be shared among the different vision modalities on the same chip.
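To illustrate how local energy and phase can be read off a Gabor-like filter pair, here is a minimal software sketch in one dimension. It is not the hardware design described above: the kernel parameters (`sigma`, `freq`, `radius`), the function names, and the sign convention for phase are all assumptions chosen for illustration, and orientation (which needs 2-D filters at several angles) is left out.

```python
import math

def gabor_pair(sigma, freq, radius):
    """Return (even, odd) 1-D Gabor kernels: Gaussian envelope times cos / sin."""
    even, odd = [], []
    for k in range(-radius, radius + 1):
        envelope = math.exp(-(k * k) / (2.0 * sigma * sigma))
        even.append(envelope * math.cos(2.0 * math.pi * freq * k))
        odd.append(envelope * math.sin(2.0 * math.pi * freq * k))
    # Make the even kernel sum to zero so flat regions give no response.
    mean = sum(even) / len(even)
    even = [e - mean for e in even]
    return even, odd

def filter_at(signal, kernel, center):
    """Correlate `kernel` with `signal` around index `center` (zero padding)."""
    radius = len(kernel) // 2
    total = 0.0
    for j, weight in enumerate(kernel):
        i = center + j - radius
        if 0 <= i < len(signal):
            total += weight * signal[i]
    return total

def local_features(signal, center, sigma=3.0, freq=0.125, radius=9):
    """Return (energy, phase) at `center` from the even/odd filter responses."""
    even, odd = gabor_pair(sigma, freq, radius)
    c = filter_at(signal, even, center)   # even (cosine) response
    s = filter_at(signal, odd, center)    # odd (sine) response
    energy = math.hypot(c, s)             # magnitude of the complex response
    phase = math.atan2(s, c)              # angle of the complex response
    return energy, phase

# Example inputs: a cosine and a sine at the filter's tuning frequency.
cosine = [math.cos(2.0 * math.pi * 0.125 * (i - 32)) for i in range(64)]
sine = [math.sin(2.0 * math.pi * 0.125 * (i - 32)) for i in range(64)]
```

Under this convention, a cosine centered on the sample point yields a phase near 0, and a sine yields a phase near π/2. The even and odd responses come from the same filter bank, which hints at why a single decomposition can feed several feature extractors.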

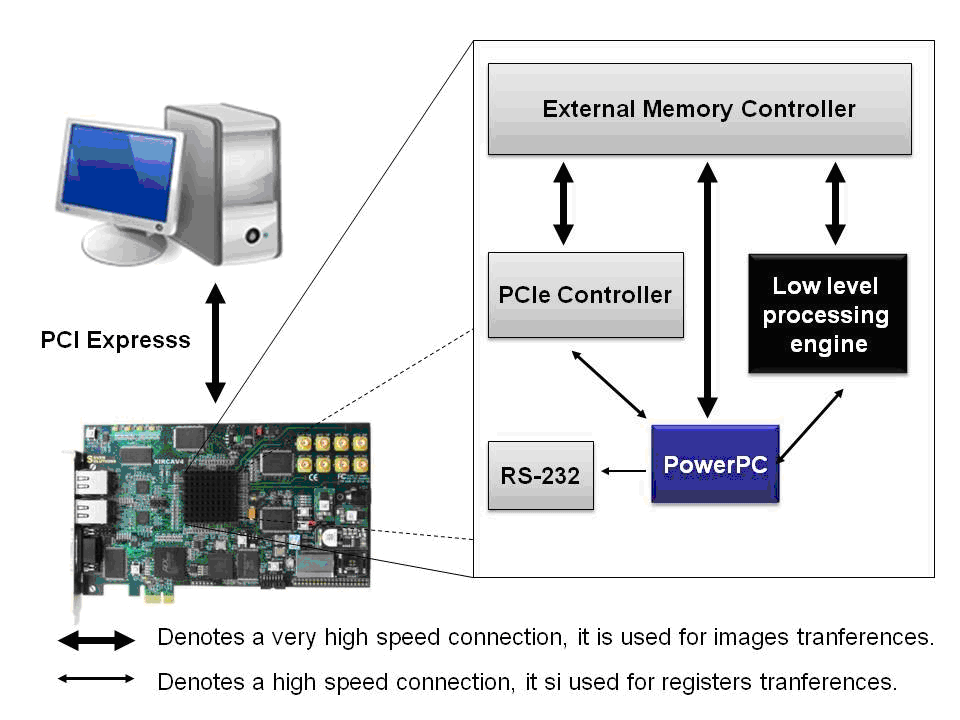

Our system runs on a Virtex-4 FX100 FPGA from Xilinx and uses the Xirca-V4 board from Seven Solutions (http://www.sevensols.com). The latest hardware design techniques have been employed to build the system, which has more than 2000 basic processing elements running in parallel and processes up to 28 fps at a resolution of 512x512 pixels. Typical design issues that must be taken into account in such an implementation are hardware resource utilization, memory accesses, data-path synchronization, and the handling of multiple clock domains.
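To put the quoted frame rate in perspective, the following short calculation converts it into a sustained pixel rate and an average time budget per pixel. The function names are illustrative, and the calculation ignores blanking intervals and any pipeline latency, so it is only a rough estimate.

```python
def pixel_rate(width, height, fps):
    """Pixels that must be processed per second at the given frame rate."""
    return width * height * fps

def time_budget_ns(width, height, fps):
    """Average time available per pixel, in nanoseconds."""
    return 1e9 / pixel_rate(width, height, fps)

rate = pixel_rate(512, 512, 28)        # 7340032 pixels per second
budget = time_budget_ns(512, 512, 28)  # roughly 136 ns per pixel
```

At 512x512 pixels and 28 fps, every feature (stereo, optical flow, and the local descriptors at each scale) has to be produced for about 7.3 million pixels per second.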

In addition, on-chip PowerPC processors have been added to our design to allow software developers to control and access the low-level vision cues. Both stand-alone operation and Linux-based operating system support are possible. The system architecture has been validated in the framework of heading estimation. Using a standard software implementation running on the embedded processors and the model of Raudies and Neumann 2009, we are able to estimate egomotion (translation and rotation) robustly at a frame rate of more than 15 fps (using 15,000 optical flow estimates). Further performance gains are expected from the development of coprocessor modules.
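As a rough illustration of how heading can be recovered from optical flow, the sketch below estimates the focus of expansion by least squares. This is not the Raudies and Neumann model used in the system: it is a much simpler textbook technique that assumes pure translation (no rotation), and the function name and the `(x, y, u, v)` input layout are hypothetical.

```python
def estimate_foe(flow):
    """Least-squares focus of expansion from flow samples (x, y, u, v).

    Assumes pure translation, so each flow vector (u, v) at (x, y) is
    parallel to (x - x0, y - y0). That gives one linear equation per sample:
        v * x0 - u * y0 = v * x - u * y
    """
    saa = sab = sbb = sac = sbc = 0.0
    for x, y, u, v in flow:
        a, b = v, -u
        c = v * x - u * y
        # Accumulate the 2x2 normal equations.
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("flow vectors do not constrain the focus of expansion")
    # Solve the 2x2 system with Cramer's rule.
    x0 = (sac * sbb - sab * sbc) / det
    y0 = (saa * sbc - sab * sac) / det
    return x0, y0

# Synthetic flow field expanding from (40, -25), sampled on a coarse grid.
samples = [
    (float(x), float(y), 0.1 * (x - 40.0), 0.1 * (y + 25.0))
    for x in range(-100, 101, 50)
    for y in range(-100, 101, 50)
]
foe = estimate_foe(samples)
```

With noise-free synthetic flow, the estimate should land on the true focus of expansion. Real flow fields also contain a rotational component, which is why a full egomotion model estimates translation and rotation jointly.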

The final platform could be employed as a coprocessor engine (using a standard PC and the developed user interface based on the PCI-Express interface) or as an embedded system for mobile applications (such as the automotive application addressed in the DRIVSCO project). Future work will explore the platform's possibilities for robotics applications and as a processing engine for much more complex vision algorithms.

Universidad de Granada

Departamento de Arquitectura y Tecnologia de Computadores,

E.T.S.I. Informatica,

|